Go ahead and ban AI weaponry. I’m all for it, but be aware that it might not help the way you hope.

***

Yesterday, Elon Musk, Stephen Hawking, Steve Wozniak (hell, even Noam Chomsky) signed a letter, hosted by the Future of Life Institute and presented in Buenos Aires at the International Joint Conference on Artificial Intelligence (IJCAI), calling for a moratorium on artificially intelligent weapons.

The full letter, available here, makes it clear just what they mean:

I can’t fault them for wanting to ban these weapons, but here’s my first thought: is there really any point?

Don’t get me wrong: I think a human should always be behind the trigger. Better still, I don’t think there should be triggers at all, or, ideally, weapons. But since that’s not the world we live in, let’s examine what a national-level “ban” on AI weapons development would mean.

The threat they’re aiming to stop, by their own account, is an AI arms race: countries one-upping one another to produce weapons that can kill intelligently on their own. The major threat, according to the letter, seems to be that an AI arms race will create “autonomous weapons [that] will become the Kalashnikovs of tomorrow.” (If you don’t know, the Kalashnikov is a Soviet-designed assault rifle with kind of terrible accuracy that is famous for its durability and complete and utter ubiquity.) In other words, AI weapons will be everywhere, and out of the hands of even (arguably) responsible governments.

What makes AI weapons worse than regular weapons? Well, the big threat, again from the letter, seems to be how easy it would be to make a weapon of genocide: one that automatically scouts around and kills anyone who matches a certain demographic. As the letter says:

But here’s the thing: it’s going to happen anyway. Why? Because AI drones that kill people aren’t nuclear weapons.

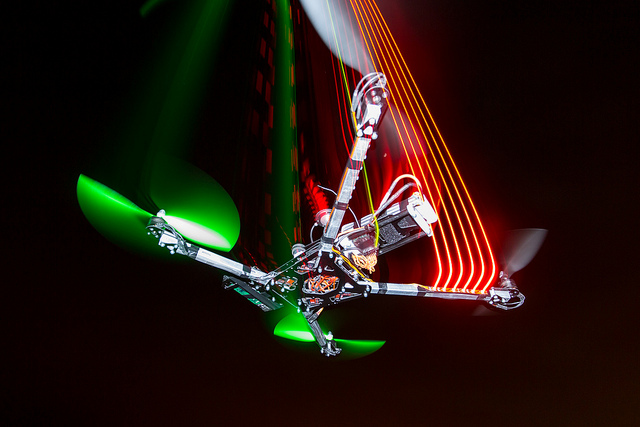

Look at the components. AI for pattern recognition is supremely useful, and so it will of course continue to be developed. Corporations want to be able to profile you when you walk into a store so they know how to advertise to you. Police want to be able to automatically search thousands of video streams for a suspect’s getaway route. It’s too profitable to be banned. Drones/quadrotors/whatever-you-want-to-call-them are commercially available, can be easily weaponized, and will only get cheaper and easier to fly, which also means easier for AI to fly. That won’t be stopped either, because delivery companies will want drones that can navigate on their own so they don’t require human pilots.

Every part of a weaponized AI drone is being independently made by for-profit companies for civilian uses. Combining them won’t be that hard.

Well, then — let’s make it a purely legal ban, you say.

Sure. Do that. That’ll probably keep more innocent civilians from dying in US drone strikes (though the piloted strikes already kill more than enough of them). But also bear in mind that labeling genocide a war crime hasn’t, as far as the evidence shows, prevented a single genocide. Labeling wartime rape a war crime hasn’t stopped it from happening, either. Why? Because committing low-tech atrocities doesn’t require the kind of industrial and technical might it takes to build a nuclear weapon.

Assassination, destabilizing nations, subduing populations: these can already be done with low-tech methods, and they will be done with high-tech ones once those are cheap and available. The letter’s authors are right about that.

But given the pace of progress on the individual parts, it won’t be long before those weapons are available, with or without a bunch of nations agreeing not to make them on purpose.

***

Richard Ford Burley is a doctoral candidate in English at Boston College, where he’s writing about remix culture and the processes that generate texts in the Middle Ages and on the internet. In his spare time he writes about science, skepticism, and feminism (and autonomous killing machines) here at This Week In Tomorrow.